Data Streams are a critical component in Salesforce Data Cloud, enabling you to ingest, transform, and unify data from various sources like Salesforce, Marketing Cloud, cloud storage, and third-party APIs. They play a key role in delivering clean, real-time customer insights.

This blog post explains what Data Streams are, how they work, their architectural layers, use cases, and best practices for professionals working with Salesforce Data Cloud.

What is a Data Stream?

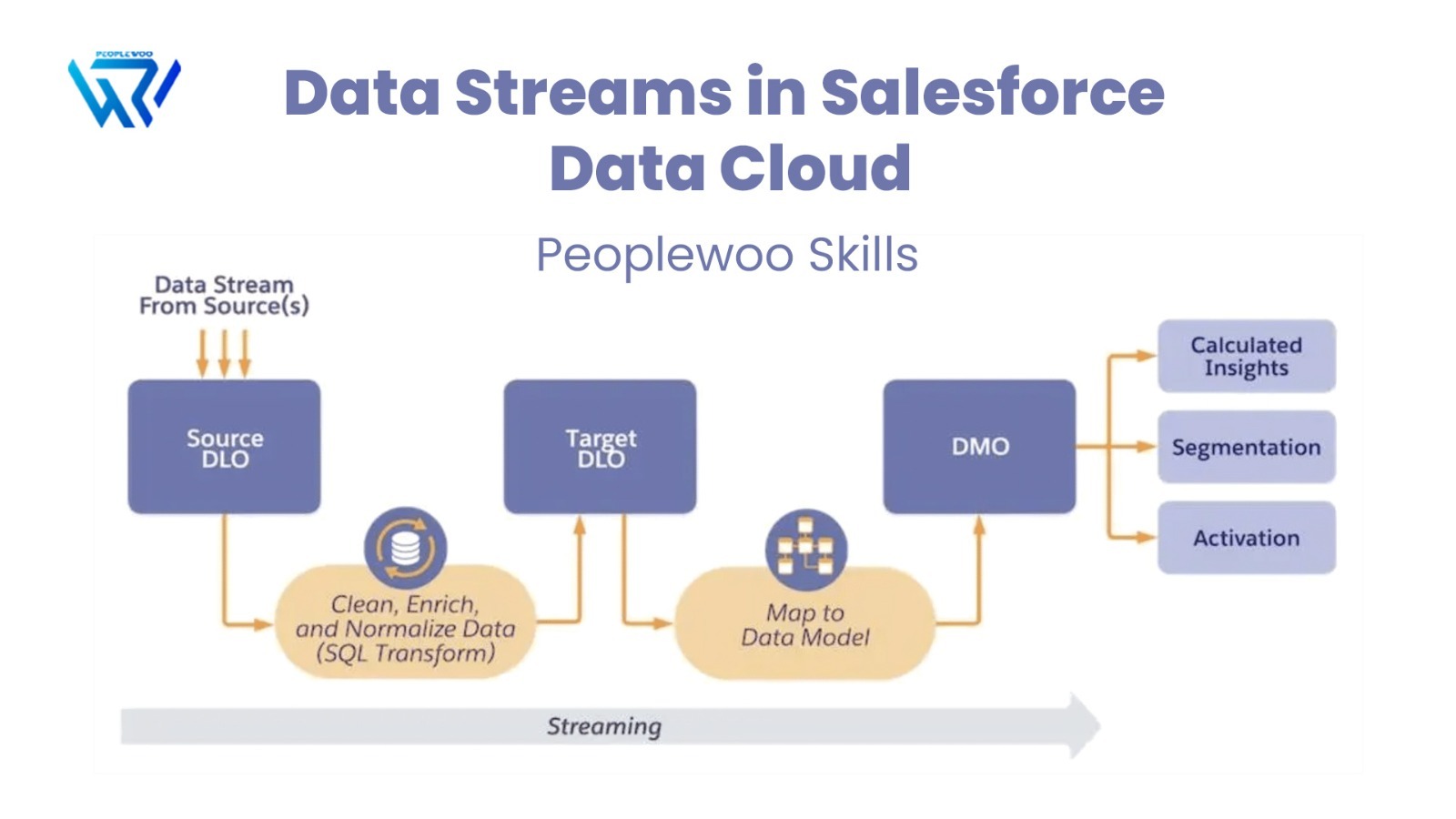

A Data Stream is a pipeline that pulls data from a source system into Salesforce Data Cloud, stores it in a Data Lake Object (DLO), and maps its fields to standardized Data Model Objects (DMOs). This allows Data Cloud to process, harmonize, and unify the information across all your touchpoints.
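
The mapping step can be pictured as a field-by-field translation from source names to target names. The sketch below is a minimal local illustration in Python, not a Data Cloud API: the `FIELD_MAPPING` dictionary, the `map_record` helper, and the target field names are hypothetical. In Data Cloud you configure mappings in the product itself rather than writing code like this.

```python
from typing import Any

# Hypothetical mapping: source field name -> target (DMO-style) field name.
# Real DMO field names come from the data model you map to in Data Cloud.
FIELD_MAPPING: dict[str, str] = {
    "Id": "SourceRecordId",
    "FirstName": "FirstName",
    "LastName": "LastName",
    "Email": "EmailAddress",
}


def map_record(source: dict[str, Any], mapping: dict[str, str]) -> dict[str, Any]:
    """Rename mapped fields, drop unmapped ones; missing source fields become None."""
    return {target: source.get(src) for src, target in mapping.items()}


# Illustrative CRM-style contact record (sample data, not from a real org).
contact = {
    "Id": "003xx0001",
    "FirstName": "Ana",
    "LastName": "Silva",
    "Email": "ana@example.com",
    "Fax": "555-0100",  # not in FIELD_MAPPING, so it is dropped
}

print(map_record(contact, FIELD_MAPPING))
```

Fields that are not mapped simply do not reach the target object, which is why reviewing the mapping before activation matters.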

Salesforce Data Cloud Architecture

The diagram below shows how data flows through the Salesforce Data Cloud layers, from ingestion to identity resolution.

Supported Data Stream Sources

| Source Type | Description | Example |
|---|---|---|
| Salesforce CRM | Standard & custom object data from Sales or Service Cloud. | Leads, Contacts |
| Marketing Cloud | Engagement & journey data from Email Studio and Journey Builder. | Email sends, opens |
| Cloud Storage | CSV/JSON ingestion via Amazon S3, Google Cloud, or Azure. | Event logs |
| Web & Mobile SDK | Behavioral event tracking from websites and apps. | Page views, product clicks |
| External APIs | Third-party or custom data ingestion via MuleSoft or ETL. | POS, Loyalty, ERP data |

Inside the Architecture of a Data Stream

- Ingestion Layer: Pulls data from Salesforce, S3, APIs, and SDKs.

- Transformation Layer: Maps data fields to Data Model Objects (DMOs).

- Data Harmonization: Standardizes values, formats, and data types for consistency.

- Identity Resolution: Unifies customer records using matching rules.

- Unified Profile: Creates a golden record for segmentation and analytics.
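
To make the last three layers concrete, here is a toy model in Python of harmonization, identity resolution, and profile building. It is a sketch under simplifying assumptions: the records and field names are hypothetical, the single exact-match rule on normalized email and the "first non-empty value wins" merge are illustrative choices, and Data Cloud's own matching and reconciliation rules are configurable and richer than this.

```python
import re
from collections import defaultdict

# Illustrative records from two hypothetical sources, already mapped to a common shape.
records = [
    {"source": "CRM", "id": "c1", "email": " Ana@Example.com ", "phone": "(555) 010-0100", "name": "Ana Silva"},
    {"source": "Ecommerce", "id": "e7", "email": "ana@example.com", "phone": "555.010.0100", "name": None},
    {"source": "CRM", "id": "c2", "email": "raj@example.com", "phone": None, "name": "Raj Patel"},
]


def harmonize(rec: dict) -> dict:
    """Harmonization: standardize formats so equal values compare equal."""
    out = dict(rec)
    if out.get("email"):
        out["email"] = out["email"].strip().lower()
    if out.get("phone"):
        out["phone"] = re.sub(r"\D", "", out["phone"])  # keep digits only
    return out


def resolve_identities(recs: list[dict]) -> dict[str, list[dict]]:
    """Identity resolution: one exact-match rule on normalized email."""
    clusters = defaultdict(list)
    for rec in recs:
        # Records without an email stay in their own cluster.
        key = rec.get("email") or f"unmatched:{rec['source']}:{rec['id']}"
        clusters[key].append(rec)
    return clusters


def build_profile(cluster: list[dict]) -> dict:
    """Unified profile: keep source IDs; take the first non-empty value per field."""
    profile = {"source_ids": [f"{r['source']}:{r['id']}" for r in cluster]}
    for field in ("email", "phone", "name"):
        profile[field] = next((r[field] for r in cluster if r.get(field)), None)
    return profile


harmonized = [harmonize(r) for r in records]
profiles = [build_profile(c) for c in resolve_identities(harmonized).values()]
for p in profiles:
    print(p)
```

Without the harmonization step, " Ana@Example.com " and "ana@example.com" would not match, and the two Ana records would stay separate profiles.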

Advanced Features of Data Streams

- Delta Updates: Process only changed records, reducing processing time.

- Ingestion Logs: Access detailed job logs, record counts, and error messages.

- Retention & Expiry: Define automatic deletion for outdated datasets.

- Preview & Validation: Inspect sample data and mappings before activation.
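
The ideas behind delta updates and retention can be sketched with a last-modified watermark and an age cutoff. This is a minimal Python illustration, assuming each source row carries a hypothetical `last_modified` timestamp; Data Cloud handles incremental processing and retention for you, so this only shows the underlying idea.

```python
from datetime import datetime, timedelta, timezone

UTC = timezone.utc

# Hypothetical source rows with a last-modified timestamp column.
source = [
    {"id": "a", "last_modified": datetime(2024, 5, 1, 9, 0, tzinfo=UTC)},
    {"id": "b", "last_modified": datetime(2024, 5, 2, 9, 0, tzinfo=UTC)},
    {"id": "c", "last_modified": datetime(2024, 5, 3, 9, 0, tzinfo=UTC)},
]


def select_changed(rows, watermark):
    """Delta update: keep rows modified after the watermark; advance the watermark."""
    changed = [r for r in rows if r["last_modified"] > watermark]
    new_watermark = max((r["last_modified"] for r in changed), default=watermark)
    return changed, new_watermark


def drop_expired(rows, now, retention):
    """Retention: keep only rows whose age is within the retention window."""
    return [r for r in rows if now - r["last_modified"] <= retention]


watermark = datetime(2024, 5, 1, 12, 0, tzinfo=UTC)
batch, watermark = select_changed(source, watermark)
print([r["id"] for r in batch])  # ['b', 'c']

# A second run with no new changes picks up nothing.
batch2, _ = select_changed(source, watermark)
print(len(batch2))  # 0

now = datetime(2024, 5, 9, 12, 0, tzinfo=UTC)
print([r["id"] for r in drop_expired(source, now, timedelta(days=7))])  # ['c']
```

The strict `>` comparison means a late-arriving row stamped exactly at the watermark would be skipped; incremental pipelines often guard against this with a small overlap window plus de-duplication.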

Real-World Use Cases

| Industry | Sources | Use Case |
|---|---|---|
| Retail | POS, Ecommerce, SFMC | Unify customer journey across store and digital channels. |
| Banking | Core banking + email engagement | Trigger personalized offers based on account activity. |
| B2B SaaS | Salesforce CRM, Helpdesk, Web | Track full lifecycle from lead to renewal and upsell. |

How to Test and Validate Data Streams

- Use Preview Data to verify incoming records before activation.

- Run the Field Mapping Validator to ensure schema compatibility.

- Monitor the Status Dashboard for ingestion failures or alerts.

- Test Segment Updates after ingestion to validate downstream flow.
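
Before uploading a file, you can run a similar preview-and-type check locally. The Python sketch below is an assumption-laden stand-in for the spirit of Preview Data and schema checks, not a call to any Data Cloud feature: the field names, the `FIELD_PARSERS` table, and the `preview_and_validate` helper are hypothetical.

```python
from datetime import date

# Hypothetical expected types for mapped fields, expressed as parser callables.
# Each parser raises ValueError (or TypeError) on a value it cannot convert.
FIELD_PARSERS = {
    "customer_id": str,
    "order_total": float,
    "order_date": date.fromisoformat,
}


def preview_and_validate(rows, parsers, sample_size=5):
    """Check the first `sample_size` rows; return (row_index, field, value) per failure."""
    problems = []
    for i, row in enumerate(rows[:sample_size]):
        for field, parse in parsers.items():
            value = row.get(field)
            if value is None or value == "":
                problems.append((i, field, value))  # empty or missing value
                continue
            try:
                parse(value)
            except (ValueError, TypeError):
                problems.append((i, field, value))
    return problems


sample = [
    {"customer_id": "C-1", "order_total": "19.99", "order_date": "2024-05-01"},
    {"customer_id": "C-2", "order_total": "n/a", "order_date": "2024-05-02"},
    {"customer_id": "", "order_total": "5", "order_date": "05/03/2024"},
]

for problem in preview_and_validate(sample, FIELD_PARSERS):
    print(problem)
```

Checking only a sample keeps the preview fast; a full pre-load check would pass `sample_size=len(rows)`.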

Learn Data Cloud Hands-On

Master real-world data stream setups, transformation rules, and identity stitching in our instructor-led Salesforce Data Cloud course.

Troubleshooting & Common Issues

- Null primary keys? Records won’t unify — check your source mappings.

- File schema mismatch? Ensure CSV/JSON headers match DMO configuration.

- Frequent ingestion errors? Review logs and retry after correcting data source issues.

- Identity collision? Manage conflicting identity matches using confidence scores.
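
The first two issues above can often be caught before ingestion with a quick script. This Python sketch uses only the standard `csv` module; the expected header list and primary key column are hypothetical placeholders for your own mapping, and headers are compared exactly, so a casing difference such as `Email` vs `email` is reported.

```python
import csv
import io

EXPECTED_HEADERS = ["customer_id", "email", "order_total"]  # hypothetical mapped columns
PRIMARY_KEY = "customer_id"  # hypothetical primary key column


def check_csv(text, expected_headers, primary_key):
    """Report header mismatches and data rows (1-based) with a blank primary key."""
    reader = csv.DictReader(io.StringIO(text))
    headers = reader.fieldnames or []
    report = {
        "missing": [h for h in expected_headers if h not in headers],
        "unexpected": [h for h in headers if h not in expected_headers],
        "null_key_rows": [],
    }
    if primary_key in headers:
        for row_no, row in enumerate(reader, start=1):
            if not (row.get(primary_key) or "").strip():
                report["null_key_rows"].append(row_no)
    return report


sample_csv = (
    "customer_id,Email,order_total\n"
    "C-1,a@example.com,10\n"
    ",b@example.com,12\n"
    "C-3,c@example.com,7\n"
)

print(check_csv(sample_csv, EXPECTED_HEADERS, PRIMARY_KEY))
# {'missing': ['email'], 'unexpected': ['Email'], 'null_key_rows': [2]}
```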

Why Learn Data Cloud with Peoplewoo Skills?

- Live, instructor-led training with real-time practice

- Hands-on experience with Salesforce Data Cloud projects

- Access to sandbox orgs and datasets

- Free demo and career support

- Preparation for Salesforce certification

Conclusion

Data Streams are the backbone of Salesforce Data Cloud, connecting data from CRM, marketing, web, and external systems into a unified ecosystem. By leveraging advanced ingestion, harmonization, and validation features, organizations can build a single source of truth for smarter segmentation and personalization.

Peoplewoo Skills helps you master Salesforce Data Cloud with live training, hands-on demos, and real-world projects that prepare you for certification and consulting roles.

Start your SFMC journey today — join our Live Training or learn at your own pace with our Udemy Course.

Learn. Practice. Get Certified. Succeed with Peoplewoo Skills.